The EU AI Act, described by many as the ‘GDPR for artificial intelligence’, is the world’s first comprehensive legal framework for AI, and its reach extends well beyond the EU to any provider placing AI systems on the EU market. Its significance lies in how it reshapes both the industries deploying AI and the legal sector that advises them.

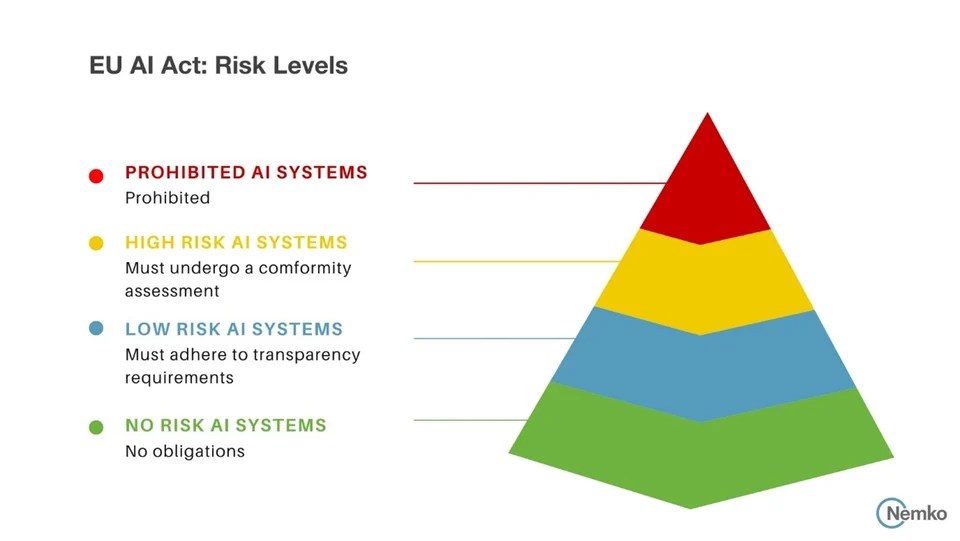

A Risk-Based Approach

At the heart of the Act is a tiered system:

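- Unacceptable-risk systems are banned outright. Examples include social scoring, manipulative techniques that exploit people’s vulnerabilities, and real-time remote biometric identification in publicly accessible spaces for law enforcement, which is permitted only under narrow exceptions.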

- High-risk systems (like AI in recruitment, education, healthcare, or credit scoring) face strict obligations: risk management processes, data governance, technical documentation, record-keeping and logging, transparency, human oversight, and requirements for accuracy and robustness.

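- Limited-risk systems (such as chatbots) must disclose that they are AI-driven, but otherwise face lighter regulation.

- Minimal-risk systems (such as spam filters) face no new obligations under the Act.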

This design reflects a pragmatic approach: regulation adapts to the context and potential harm of the system, rather than restricting innovation across the board.

Impact on Industry

For sectors like finance, healthcare, and manufacturing, the Act demands a fundamental rethink of how AI is procured and deployed. Businesses must carry out detailed risk assessments, document how their models operate, and introduce ongoing monitoring to remain compliant. Fines for the most serious breaches, such as using prohibited practices, can reach €35 million or 7% of worldwide annual turnover, whichever is higher. This makes AI governance a board-level issue.

Financial services (credit, insurance, AML): AI systems that evaluate individuals’ creditworthiness, or that assess risk and set prices for life and health insurance, are classified as high-risk. Expect procurement checklists, bias testing, human-in-the-loop controls, and stronger vendor contracts (warranties, audit rights, and allocation of compliance roles). Non-compliance risks both supervisory findings and AI Act fines.

Employment & HR tech: AI used for screening, ranking or evaluating candidates is high-risk. Employers will need documented risk assessments, explainability protocols for decisions, and clear candidate notices. Vendors will be pressed for technical documentation and change-management commitments.

Healthcare & regulated products: If AI is a safety component of a product (e.g., a software module in a medical device), AI Act duties layer on top of existing product safety regimes, with a longer transition period than other high-risk systems. Conformity assessments and post-market surveillance become central.

Biometrics & public-facing systems: Many biometric identification and analysis tools are high-risk, while certain practices, such as untargeted scraping of facial images to build recognition databases, are banned entirely. Deployers should reassess their lawful bases, necessity and proportionality, and fallback processes.

Although compliance will be costly, the Act also offers clarity. Investors are more likely to back AI projects in Europe knowing there is a clear legal framework. In this sense, regulation can reduce uncertainty and create conditions for more sustainable innovation.

Impact on Law Firms

The implications for law firms are twofold. First, clients will need advice on classification, compliance programmes, and contract renegotiations with AI vendors. AI clauses in M&A transactions, financing agreements, and outsourcing contracts will become standard. Disputes teams will also be drawn into investigations and litigation arising from non-compliance.

Second, firms themselves are adopters of AI. Tools such as contract review software and legal drafting copilots are already in use. While these are unlikely to fall within the high-risk category, the Act’s emphasis on transparency and accountability will shape how firms govern their own use of such tools, especially when handling sensitive client data.

Looking Ahead

The EU AI Act signals a shift: AI governance is no longer voluntary or experimental, but mandatory and enforceable. Industries must integrate compliance into product development, and law firms must be ready to advise while ensuring their own practices align with the same principles.
